The Impact of AI Chatbots on Chiropractic Practices: Top Insights and Concerns

ChiroUp recently surveyed 150 DCs to learn how they use chatbots such as ChatGPT® and Google Bard®. In this blog, we examine the most common concerns voiced by current chatbot users, from accuracy and citations to HIPAA compliance and SEO implications, and what those concerns mean for using an AI chatbot in chiropractic practice.

In less than 10 minutes, you can review our essential advice, including a tutorial video, to make the most of this game-changing technology while avoiding the potential pitfalls.

-

Artificial Intelligence, commonly called AI, is a branch of computer science that focuses on creating intelligent machines capable of performing tasks that typically require human intelligence. It involves developing algorithms and systems to analyze data, learn from it, and make decisions or solve problems, simulating human-like cognitive abilities such as reasoning, problem-solving, and learning.

Large Language Model, or LLM, refers to a sophisticated artificial intelligence system that has been trained on a vast amount of text data to understand and generate human-like language.

Chatbots, short for chat robots, are computer programs that engage in conversations with users. Modern AI chatbots are built on a large language model (LLM) and use natural language processing (NLP) techniques. They can simulate human-like interactions, understand and respond to natural language inputs, provide information, answer queries, and perform tasks across a wide range of topics.

ChatGPT® is a chatbot developed by OpenAI and released to the public in November 2022. OpenAI's co-founders include Sam Altman and Elon Musk, although Musk left OpenAI's board in 2018, years before ChatGPT launched. The platform has both free and paid versions.

A “prompt” is the message or instruction a user enters to start a conversation or to request a specific action or piece of information from the chatbot. It is the starting point from which the chatbot generates a response or carries out a task. The quality of the prompt has a significant impact on the quality of the answer.

How Many Chiropractors Use An AI Chatbot?

ChiroUp recently surveyed 150 progressive, evidence-based DCs in its network and found that nearly half have used an AI chatbot, and approximately one-third are at least occasional users of this game-changing technology.

Why Do Some Chiropractors Not Use AI Chatbots?

Unsure of the need and benefits

Unfamiliar or untrained on the platform

Uncertain about tracking and data

Ethical concerns, including impact on the workforce

Despite their concerns, most current non-adopters indicated they would be willing to consider chatbot use in the future. So your ChiroUp team created this short tutorial video highlighting how to use a chatbot and some examples of tasks that chatbots can streamline.

Have you found other practical tasks that chatbots can expedite? If so, email me at Tim@ChiroUp.com. We’ll include your advice in an upcoming podcast episode on Practical AI Chatbot Applications in Chiropractic Practice.

BTW, if you’re not yet following our podcast, go listen to the latest episode of the Mic’d Up with ChiroUp Podcast on Apple Podcasts or Spotify.

AI will continue to spread across the business and healthcare landscapes, so be prepared.

According to a recent Salesforce study, 67% of senior IT leaders actively advocate for integrating generative AI throughout their organizations within the next 18 months, and one-third of them rank it as their highest priority. However, amid this drive for adoption, most leaders have concerns about the implications, including the accuracy of generative AI outputs and security and compliance issues. (1,2)

Not surprisingly, the chiropractors we surveyed expressed similar concerns. This blog details those concerns, along with tips to help you avoid problems.

The Top 5 Concerns of Chiropractic AI Chatbot Users

False, outdated, or inaccurate information

Lack of ability to incorporate specialized or nuanced language

Incorrect citations and references

HIPAA non-compliance

Concerns that AI-generated text may lower SEO

1. False Information

“If it's on the internet, it must be true!” - No one… ever

The most significant concern regarding chatbot-generated content is that it could be inaccurate. And that concern is legitimate, as highlighted by a Google senior vice president:

"This kind of artificial intelligence we're talking about right now can sometimes lead to something we call hallucination. This then expresses itself in such a way that a machine provides a convincing but completely made-up answer." (3)

And according to an article in JAMA Health Forum, chatbots struggle to prioritize credibility, even when the information they provide is obtained from published articles:

“Most LLMs are trained on indiscriminate assemblages of web text with little regard to how sources vary in reliability. They treat articles published in the New England Journal of Medicine and Reddit discussions as equally authoritative.” (4)

Bottom line: Healthcare lawyers recommend that physicians use Chatbots to augment, not replace, their professional judgment. (4,5)

2. Technically Inaccurate Clinical Information

Chatbots face a significant challenge in analyzing complex technical topics and applying that knowledge effectively in practical scenarios. Due to their reliance on pre-existing data and algorithms, chatbots often lack the discernment required for understanding intricate details and making informed judgments.

This limitation can result in inaccurate or incomplete responses, ranging from inconveniences to potentially harmful outcomes, particularly in critical domains like healthcare.

One recent analysis of ChatGPT recommendations for managing various clinical scenarios found that the chatbot's output was not “so incorrect as to cause patient harm” 91% to 93% of the time. However, agreement between the chatbot's advice and that of consulting physicians and informatics experts was only 21% to 41%. (6) Other studies rated the accuracy of chatbot output (ChatGPT 3.5) in response to clinical queries at 72% to 73%. (4,7)

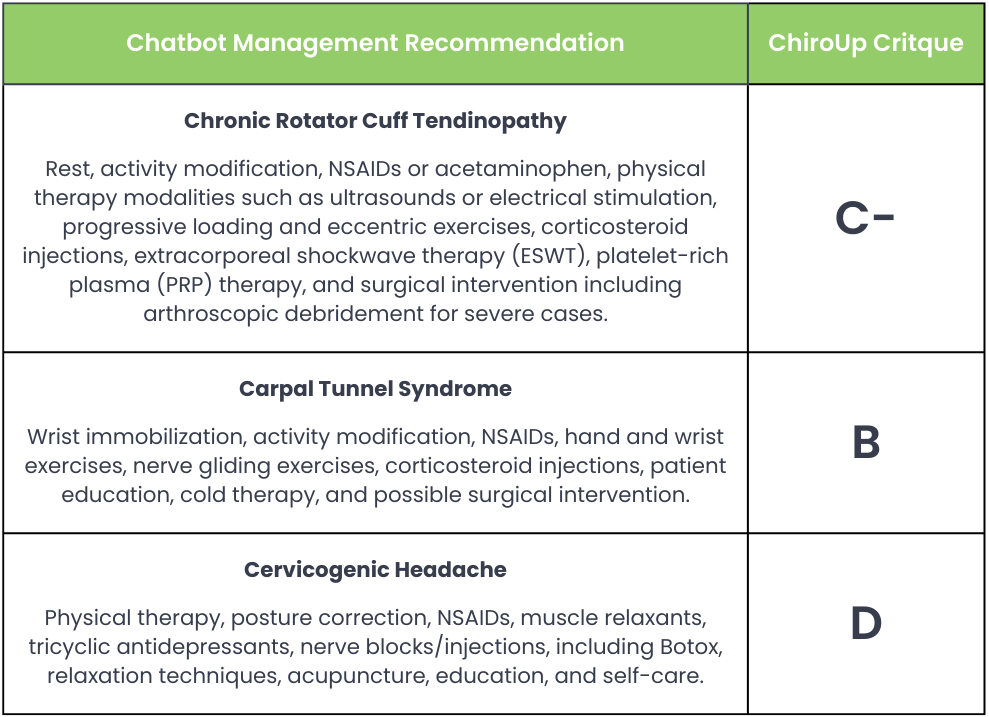

As part of our research, we queried chatbots and found that they also struggle to define current musculoskeletal best practices. The following chart outlines chatbot responses when asked to list current best practices for three common conditions, along with a subjective grade (A-F) assigned by members of the ChiroUp advisory board.

The bad news is that Chatbots obviously struggle to rival a knowledgeable evidence-based provider's real-world intellect and reasoning. And that’s the good news too. 😏 Your job is safe!

Efforts are underway to improve chatbots' technical knowledge and analytical capabilities through advancements in natural language processing and machine learning. However, it remains essential to validate their recommendations with human experts when accuracy is crucial, leveraging chatbots as supportive tools rather than relying solely on their outputs.

Bottom line: This advice from JAMA Health Forum sums it up best: “We believe that presently, physicians should use LLMs only to supplement more traditional forms of information seeking. Comparing output with reputable…clinical decision support systems can help capture the distinctive value of LLMs while avoiding their pitfalls. For clinical practice guidelines,...reputable online services and stewardship by clinical experts provides some assurance that the recommendations are accurate.” (4)

ChiroUp is exactly that reputable online service that provides you with assurance! Our best practice protocols are built and maintained with the highest quality research and undergo peer review by a team of chiropractic specialists so you can confidently deliver exceptional care.

Want to learn more? See how ChiroUp providers are leveraging ChiroUp to stay on top of the latest evidence-based clinical research.

3. Absent or Incorrect Citations and References

Evidence-based providers rely upon source references to help validate any new information. Unfortunately, most Chatbots lack the ability to provide accurate citations for their output:

“Typically, no list of references is provided by which a physician may evaluate the reliability of the information used to generate the output.” (4)

My Experience With Chatbot Citations

Shortly after Chatbots burst onto the scene, I asked ChatGPT® to “list the most sensitive and specific assessments for an intercostal strain.” With bated breath, I read the response—a list of three tests that sounded reasonable yet entirely foreign to me. Determined to dive deeper, I asked for references to support these tests. And there it was, a jackpot of six citations, even formatted in Vancouver style. Did my life just take an unexpected turn? Had my endless hours of paper sifting and best practice mining been reduced to mere seconds of work?

I was ready to hug my computer until reality hit back. A quick Google search came up empty. The first reference? Nowhere to be found. The second? Vanished into thin air. Every component of those "references" was fabricated, just like any other string of words a chatbot assembles by predicting what sounds plausible.

Disappointing, yes, but it could have been worse. One lawyer who relied on ChatGPT to help prepare a court filing faced sanctions because, in the court's words, “Six of the submitted cases [created by ChatGPT] appear to be bogus judicial decisions with bogus quotes and bogus internal citations." (8)

Seasoned chatbot users can confirm that requesting a citation from most chatbots usually results in a completely fabricated reference. Fortunately, this issue will likely improve as chatbots evolve. In the meantime, you can increase the likelihood of receiving legitimate citations by asking the bot to provide links (URLs) to its sources. Some platforms, like Perplexity®, also list reference hyperlinks for their content.

Bottom line: Never trust citations supplied by a Chatbot. Always take the time to confirm the accuracy of the citation and the associated content. (9)

4. Lack of AI Chatbot HIPAA Compliance

According to our survey, more than 72% of chatbot early adopters (frequent and occasional users) used the platform for business-related tasks. But if those tasks involve patient data, is ChatGPT HIPAA compliant? Well… even the bot can answer that question:

“While I, as ChatGPT, don't process or store personal data unless explicitly provided by the user during the course of the conversation, it's important to note that conversations with AI systems can potentially involve the disclosure of sensitive information. Therefore, it's crucial to exercise caution and avoid sharing any personally identifiable or sensitive health information during our conversation.” (10)

And a recent Newswires article reinforced the consequences of sharing PHI with a chatbot:

“...accidental PHI inclusion while using non-HIPAA-compliant AI services, such as ChatGPT Plus, can lead to severe HIPAA violations, resulting in hefty fines” (11)

Bottom line: Unless you’re working with a recognized and approved HIPAA-compliant platform, it is crucial to de-identify or anonymize patient data to minimize the risk of PHI breaches.
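For practices that want a first line of defense before pasting text into a general-purpose chatbot, the de-identification advice above can be illustrated with a simple redaction pass. The Python example below is a minimal, hypothetical sketch, not a ChiroUp tool or a vetted compliance solution: the function name `redact_phi` and its patterns are our own assumptions, and they catch only a few obvious identifier formats (email addresses, U.S.-style phone numbers, Social Security numbers, and numeric dates). They will miss names, addresses, and many other identifiers, so this is not, on its own, HIPAA de-identification, which under the Safe Harbor method requires removing 18 categories of identifiers.

```python
import re

# Hypothetical redaction patterns, for illustration only. They catch a few
# common identifier formats and do NOT cover names, addresses, or the other
# HIPAA Safe Harbor identifier categories.
PHI_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]\d{4}\b")),
    ("DATE", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
]


def redact_phi(text: str) -> str:
    """Replace each pattern match with a bracketed label such as [EMAIL]."""
    # Patterns are applied in list order; SSN runs before PHONE so that a
    # Social Security number is labeled as such.
    for label, pattern in PHI_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    note = (
        "Pt seen 07/10/2023, call 555-123-4567 or email jdoe@example.com. "
        "SSN 123-45-6789. Reports mid-thoracic pain after lifting."
    )
    print(redact_phi(note))
```

Running the example prints the note with the date, phone number, email, and SSN replaced by labels while the clinical description is left intact. Even with a pass like this, a person should still read the text before it leaves the practice, because pattern matching cannot recognize a patient's name or other free-text identifiers.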

Is There a HIPAA-Compliant Chatbot?

Does this end our dreams of using AI to supercharge and automate chiropractic clinical tasks like charting, symptom assessment, diagnosis assistance, and care plan monitoring? Fortunately, no. Several companies, including Google and BastionGPT®, are developing HIPAA-compliant options. (12,13)

The Mayo Clinic and others are testing Google’s Med-PaLM 2, a HIPAA-compliant AI chatbot for medical questions. (12) And the bot appears to be performing well:

“Med-PaLM 2 still suffers from some of the accuracy issues we’re already used to seeing in large language models [inaccuracies and irrelevant information] …. Still, in almost every other metric, such as showing evidence of reasoning, consensus-supported answers, or showing no sign of incorrect comprehension, Med-PaLM 2 performed more or less as well as the actual doctors.” (13)

5. Concerns That AI-Generated Text May Lower SEO

Early on, website creators stayed away from chatbot-generated content after Google’s Search Advocate, John Mueller, declared in 2022 that AI-generated content was considered spam and was explicitly prohibited under Google's guidelines. (14)

However, Google has since revised its stance on AI-generated content, saying its algorithms would not penalize websites for incorporating it. (14-16)

“Appropriate use of AI or automation is not against our guidelines. This means that it is not used to generate content primarily to manipulate search rankings, which is against our spam policies.” (14)

Bottom line: Use AI generators to create valuable content, but fact-check and proofread meticulously, especially the references.

Conclusion

The world of evidence-based chiropractic practice is rapidly evolving, and Chatbots are becoming increasingly prevalent tools for information dissemination and patient engagement. While Chatbots offer convenience and access to a vast information pool, concerns regarding accuracy, proper citations, and HIPAA compliance must be addressed. Chiropractors need to exercise caution, fact-check information, and validate sources when utilizing an AI Chatbot for healthcare purposes to ensure the highest standard of patient care.

By integrating Chatbots as supplementary tools alongside reputable clinical decision support systems, chiropractors can strike a balance between leveraging the benefits of Chatbots and maintaining the integrity of their professional judgment. The responsible integration of Chatbots certainly has the potential to enhance chiropractic practice and contribute to improved patient outcomes.

Ultimately, AI tools aim to simplify some tasks and make you more efficient so you can do more in less time. Can you guess another tool that thousands of chiropractors use to be more efficient and deliver the highest standard of care?

-

1. Baxter K, Schlesinger Y. Managing the Risks of Generative AI. Harvard Business Review. June 6, 2023. Accessed July 17, 2023.

2. Salesforce.com. IT Leaders Call Generative AI a ‘Game Changer’ but Seek Progress on Ethics and Trust. Salesforce News and Insights. March 6, 2023. Accessed July 17, 2023.

3. More R. Google cautions against 'hallucinating' chatbots, report says. Reuters. February 10, 2023. Accessed July 17, 2023.

4. Mello MM, Guha N. ChatGPT and Physicians’ Malpractice Risk. JAMA Health Forum. 2023;4(5):e231938. doi:10.1001/jamahealthforum.2023.1938

5. Haupt CE, Marks M. AI-generated medical advice—GPT and beyond. JAMA. 2023 Apr 25;329(16):1349-1350.

6. Dash D, Horvitz E, Shah N. How Well Do Large Language Models Support Clinician Information Needs? Stanford University. March 31, 2023. Accessed July 17, 2023.

7. Johnson D, Goodman R, Patrinely J, Stone C, Zimmerman E, Donald R, Chang S, Berkowitz S, Finn A, Jahangir E, Scoville E. Assessing the accuracy and reliability of AI-generated medical responses: an evaluation of the Chat-GPT model.

8. O’Connor L. Lawyer Blames ChatGPT For Fake Citations In Court Filing. HuffPost. May 30, 2023. Accessed July 17, 2023.

9. Gewirtz D. How to make ChatGPT provide sources and citations. ZDNET. July 1, 2023. Accessed July 1, 2023.

10. ChatGPT query by Tim Bertelsman on July 9, 2023.

11. Spencer J. Revolutionizing Healthcare: HIPAA Compliant ChatGPT. Newswires. July 10, 2023. Accessed July 12, 2023.

12. Kruppa M, Subbaraman N. In Battle With Microsoft, Google Bets on Medical AI Program to Crack Healthcare Industry. July 8, 2023. Accessed July 12, 2023.

13. Davis W. Google’s Medical AI Chatbot Is Already Being Tested In Hospitals. The Verge. July 8, 2023. Accessed July 10, 2023.

14. Hutchinson A. Google Says that AI-Generated Content is Not Against its Search Guidelines. SocialMediaToday. February 8, 2023. Accessed July 17, 2023.

15. Schoon B. Google confirms AI-generated content isn’t against Search guidelines. SocialMediaToday. February 8, 2023. Accessed July 12, 2023.

16. Proofed.com. Is AI-Generated Content Bad for SEO? March 29, 2023. Accessed July 16, 2023.